Sourcing Cookbook: A Recipe to Save Time and Increase Conversion Rates

I enjoy automating my sourcing workflow. In this article, I will share my sourcing cookbook recipe, which should increase your conversion rates and cut the hours spent on manual tasks.

Let’s find some Solution Architects, shall we?

Step 1. LinkedIn

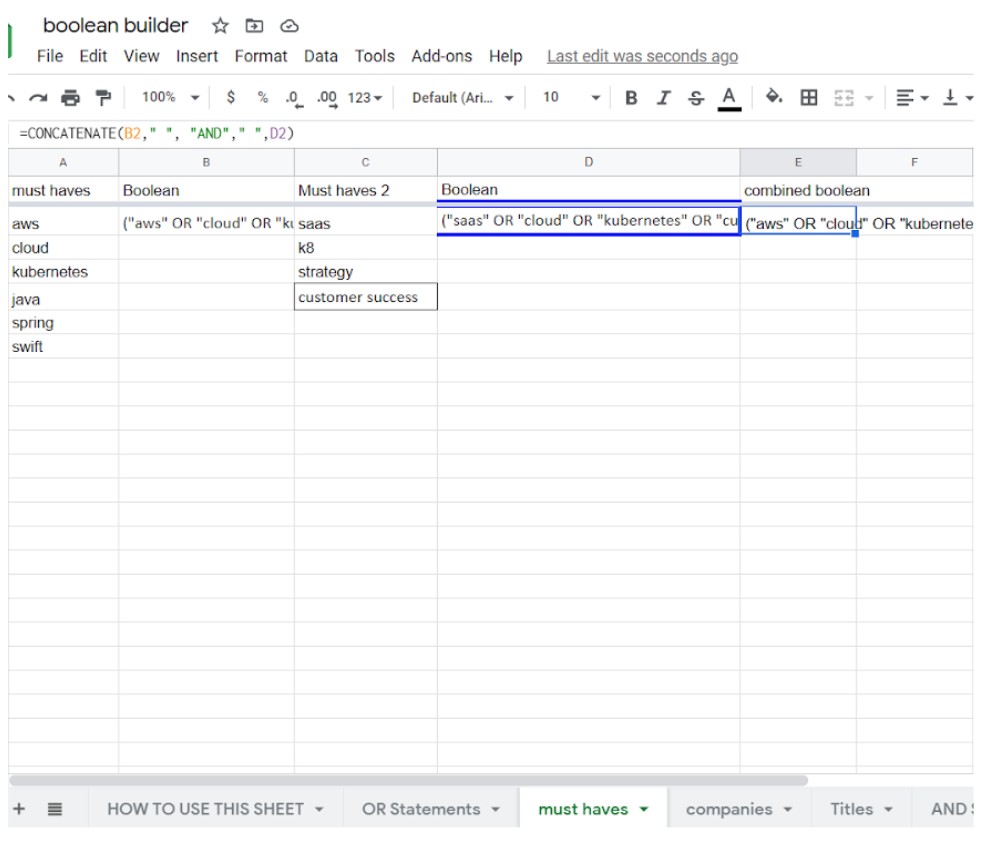

We can use an awesome Boolean builder. In this example, I’m using the one from Mike Cohen.

Once you have your preferred Boolean lined up and combined with the “Concatenate” function, it’s just a matter of copying it into the keyword section in LinkedIn.
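If you prefer a script over a spreadsheet, the same concatenation idea can be sketched in a few lines of Python. This is only an illustration: the function names and the example terms are my own assumptions, not part of Mike Cohen’s builder.

```python
def or_group(terms):
    """Join terms with OR inside parentheses, quoting multi-word phrases."""
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_boolean(*groups):
    """AND together several OR groups, skipping empty ones."""
    return " AND ".join(or_group(g) for g in groups if g)


# Hypothetical term lists for a Solution Architect search.
titles = ["solution architect", "solutions architect"]
skills = ["microservices", "event driven architecture"]

boolean_string = build_boolean(titles, skills)
print(boolean_string)
```

The printed string can then be pasted into the keyword field just like the spreadsheet version.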

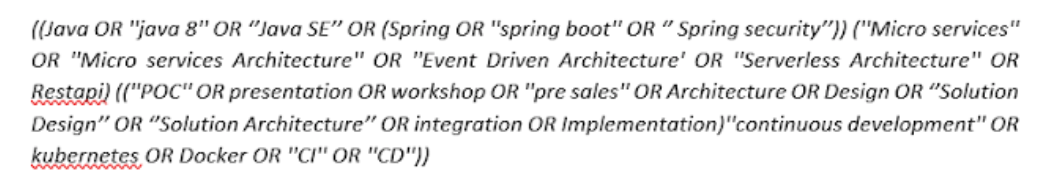

I’m using my own Boolean string in my keyword section of LinkedIn:

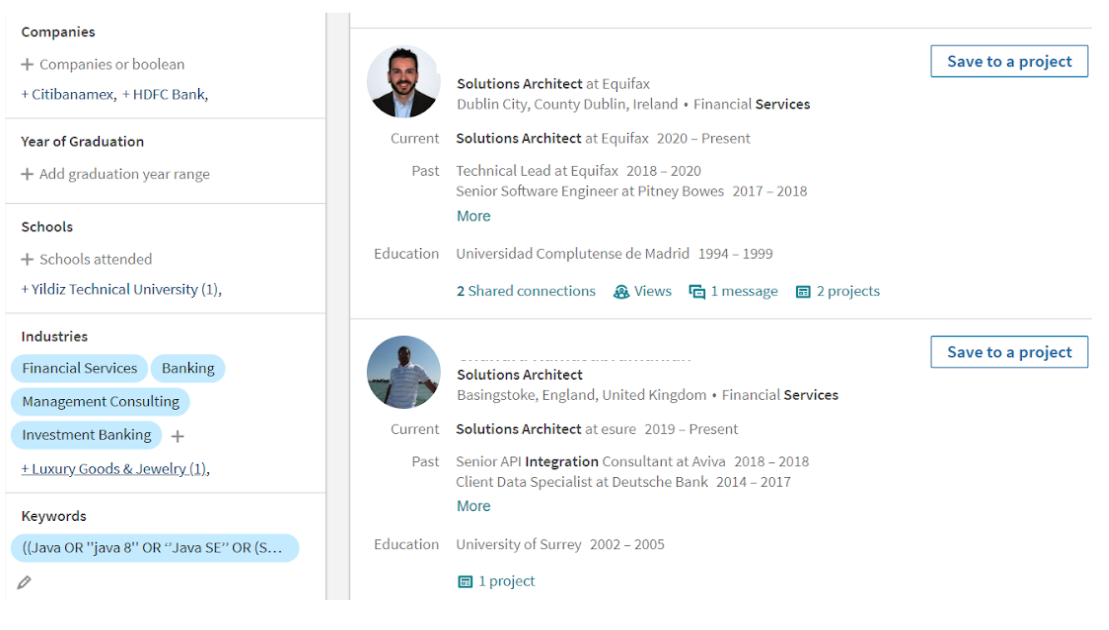

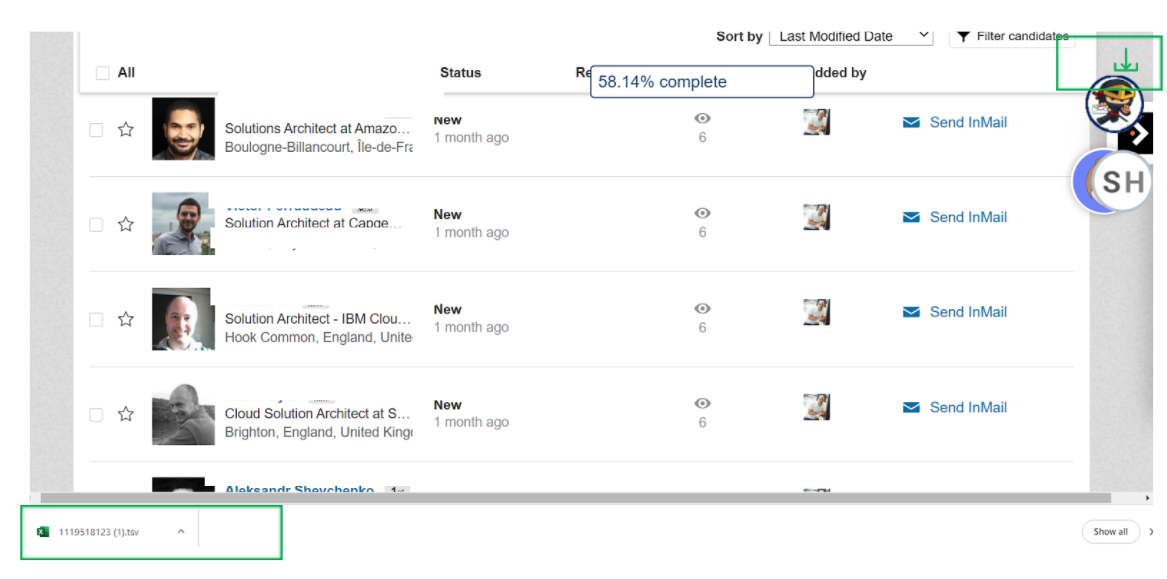

And here’s the result:

Step 2. Scraping and Enriching Data

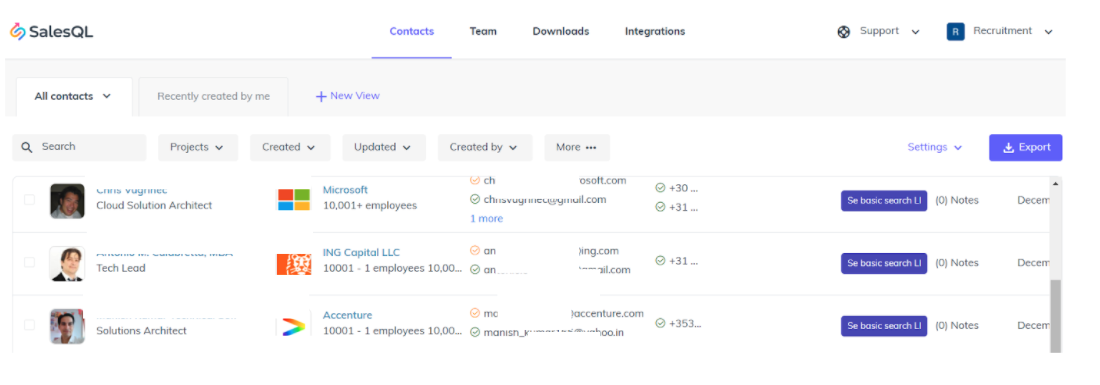

What’s next? I’m using two different tools, Quickli* and SalesQL. Both instantly scrape the shortlist from the LinkedIn results above.

Why scrape? Simply to be time-efficient. Additionally, reaching out via InMail yields a lower conversion rate (18% on average) than an email campaign.

I make sure that the data is cleaned up and pasted as values into a neat CSV.
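The clean-up can also be scripted. Below is a minimal sketch in Python, assuming the scraper export has `name` and `title` columns; those column names and the sample data are hypothetical, so adjust them to whatever your tool actually produces.

```python
import csv
import io


def clean_rows(rows):
    """Trim headers, collapse stray whitespace, and drop rows without a name."""
    cleaned = []
    for row in rows:
        tidy = {
            key.strip(): " ".join((value or "").split())
            for key, value in row.items()
            if key is not None  # DictReader stores overflow cells under None
        }
        if tidy.get("name"):
            cleaned.append(tidy)
    return cleaned


# Hypothetical scraper export with messy spacing and one empty row.
raw = "name,title\n  Ada   Lovelace ,Solution Architect\n,\n"
rows = clean_rows(csv.DictReader(io.StringIO(raw)))

# Write the cleaned rows back out as CSV text.
out = io.StringIO()
writer = csv.DictWriter(out, fieldnames=["name", "title"])
writer.writeheader()
writer.writerows(rows)
print(out.getvalue())
```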

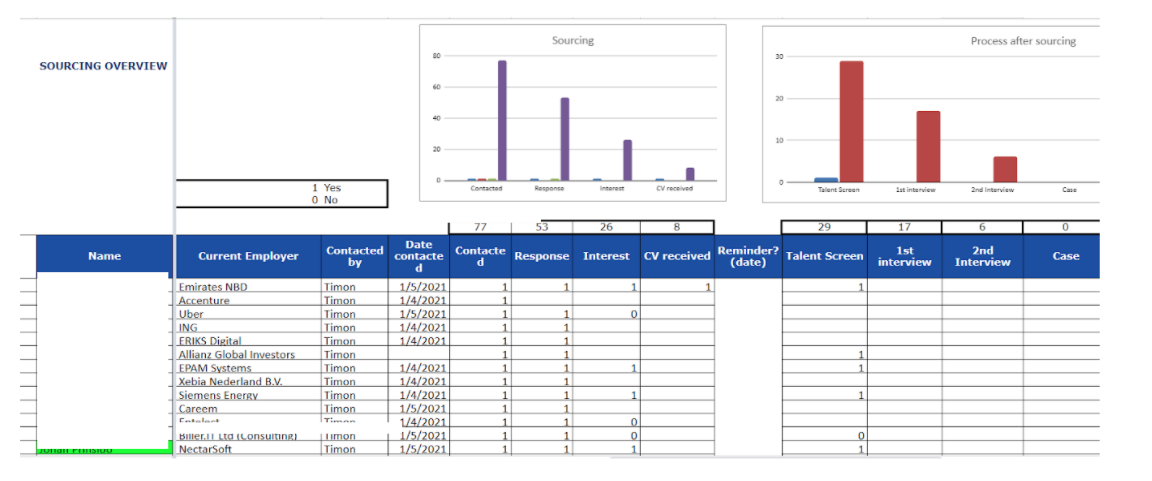

Once I have scraped the data, I upload it into my own Google spreadsheet and enrich it as shown below. I use this spreadsheet to keep track of the sourcing funnel and make it visible to the hiring managers.

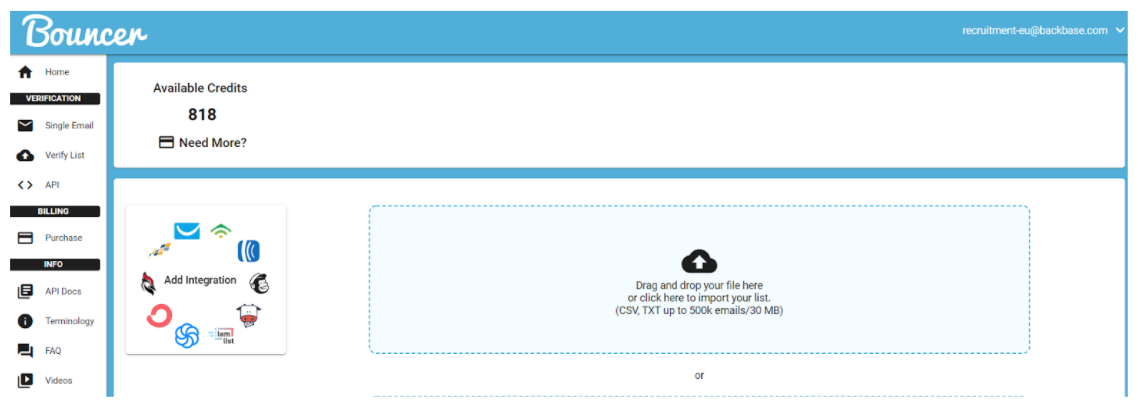

Then, I use contact finder tools such as ContactOut, Kendo, and Swordfish. You will still need to check each email’s validity with an email verification tool. Personally, I use Clearbit or Bouncer to check a whole list of candidates in one go.

Alternatively, you could use an online verifier such as http://email-verifier.online-domain-tools.com/.

Another, more time-effective option is SalesQL. Unlike Quickli, SalesQL automatically populates the list with e-mail addresses. To be fair, often only 60-70% of the addresses SalesQL gave me were correct, so it is important to always check your email deliverability.

For the remaining 30-40% of undeliverable emails from SalesQL, I use ContactOut, since its e-mail deliverability is around 90-95%.
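The triage itself is simple enough to script once a verifier has labelled each address. Here is a rough sketch, assuming each candidate row carries an `email_status` field whose value is `"deliverable"` for good addresses; that field name and its values are hypothetical, since every verification tool exports its own format.

```python
def split_by_deliverability(candidates):
    """Separate candidates with a verified address from those needing a second lookup."""
    deliverable, needs_fallback = [], []
    for candidate in candidates:
        if candidate.get("email_status") == "deliverable":
            deliverable.append(candidate)
        else:
            needs_fallback.append(candidate)
    return deliverable, needs_fallback


# Hypothetical verifier output for three candidates.
checked = [
    {"name": "A", "email": "a@example.com", "email_status": "deliverable"},
    {"name": "B", "email": "b@example.com", "email_status": "undeliverable"},
    {"name": "C", "email": "", "email_status": "unknown"},
]

ready, retry = split_by_deliverability(checked)
print(f"{len(ready)} ready to mail, {len(retry)} need another lookup")
```

The `retry` list is the one I would hand to a second contact finder.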

Step 3. Reach-outs

Now, it’s time to drive the e-mail campaign.

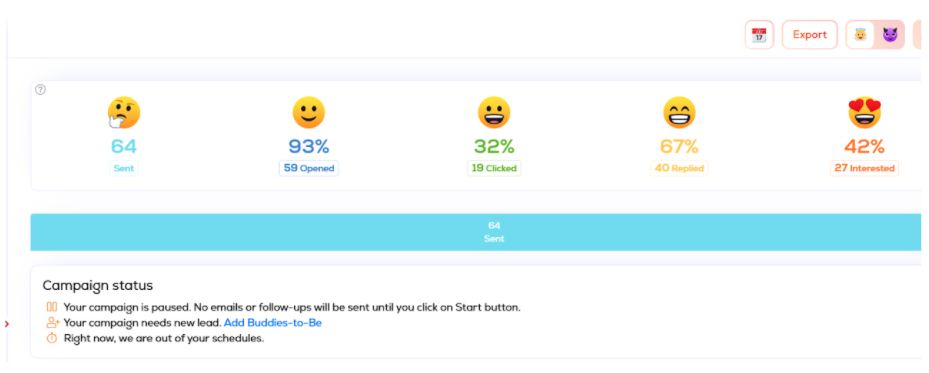

I prefer to use Lemlist instead of reaching out to candidates one by one. This saves time, and together with my own master sheet it lets me keep track of all my activities.

Have a look at this useful tutorial of Lemlist.

I created aliases for all my hiring managers. This is easy to set up in the Email Provider Settings.

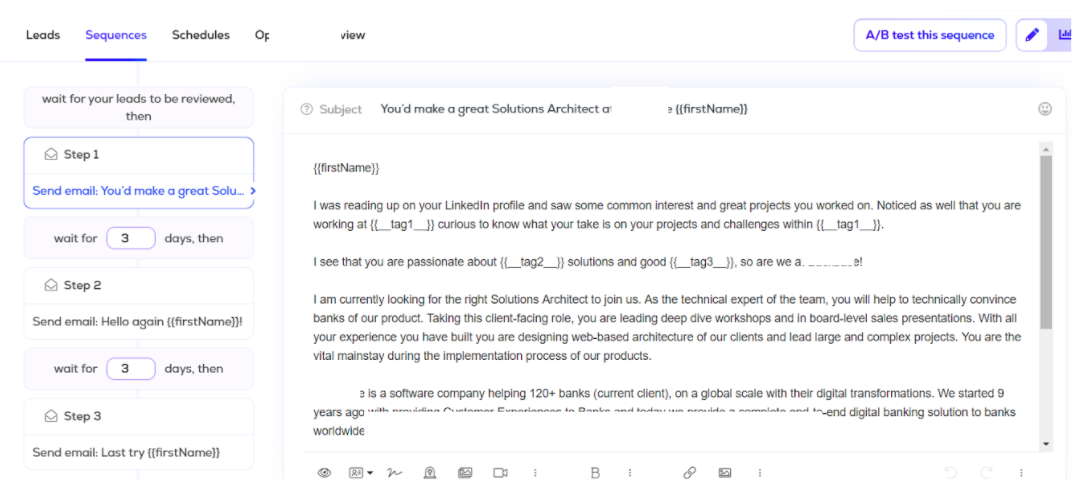

Once I have imported my shortlisted candidates into Lemlist, I create a “personalized” message sequence as if I were the hiring manager. This increases my chances of a higher conversion rate.

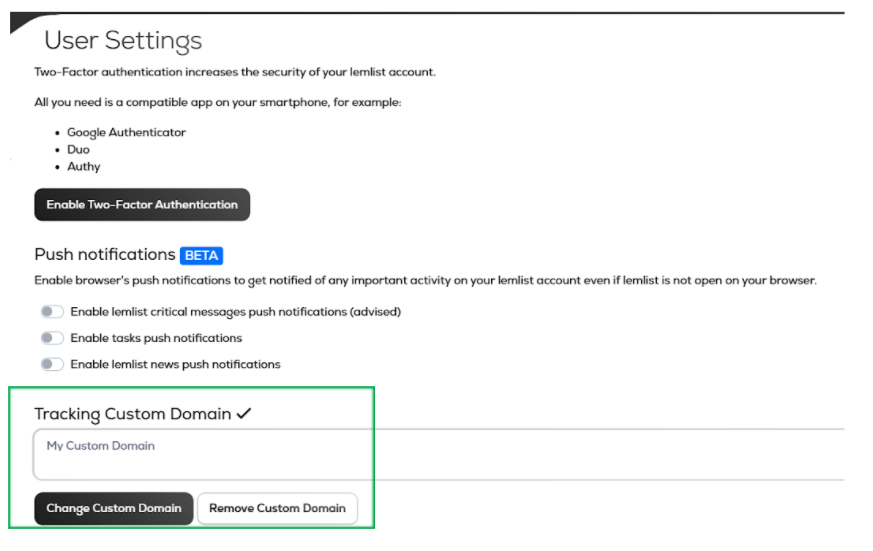

To keep the email campaign from ending up in spam folders, I ask my ICT support team to give me the Tracking Custom Domain of the company I work for.

As a result, my conversion rate has gone from 18% to 42%. The best part is that I cut out some manual tasks.

Step 4. Site Searches

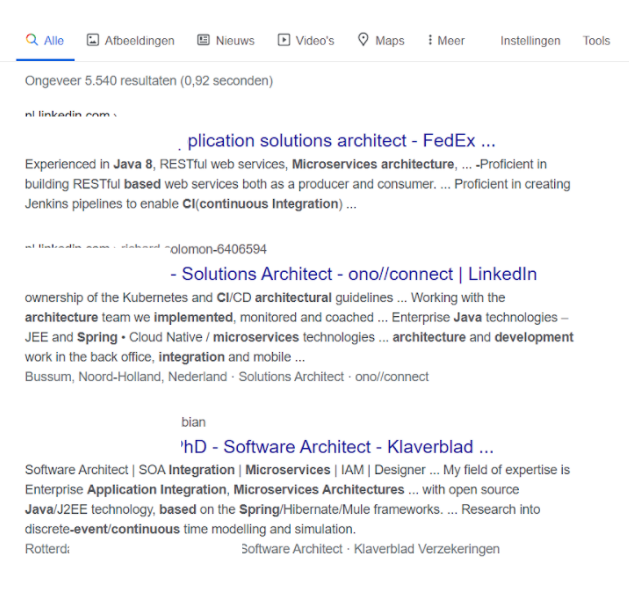

I’m leaving LinkedIn and I’m searching via Google.

In this example I have built this string:

site:linkedin.com/in -inurl:dir intitle:"architect" ("Microservices" OR "event driven architecture" OR "Microservices architecture") ("springboot" OR "java 8" OR spring) ("implementation of CI" OR "continuous development" OR "continuous integration")

Since multiple OR statements are sometimes counterproductive, I also try this site search:

site:linkedin.com/in -inurl:dir intitle:"Solution Architect" (Developed in java|spring|"Microservices") -jobs -job -apply -sample
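When I want to vary titles and keywords quickly, strings like these can be assembled in code. The sketch below is an assumption-laden illustration: the helper names are mine, and it only covers the operators used above (site:, -inurl:dir, intitle:, OR groups, and exclusions).

```python
from urllib.parse import urlencode


def site_search(site, title, any_of=(), exclude=()):
    """Build an X-ray search string for profile pages on the given site."""
    parts = [f"site:{site}", "-inurl:dir", f'intitle:"{title}"']
    if any_of:
        terms = [f'"{t}"' if " " in t else t for t in any_of]
        parts.append("(" + " OR ".join(terms) + ")")
    parts.extend(f"-{word}" for word in exclude)
    return " ".join(parts)


def google_url(query):
    """Turn the search string into a URL you can open in a browser."""
    return "https://www.google.com/search?" + urlencode({"q": query})


query = site_search(
    "linkedin.com/in",
    "Solution Architect",
    any_of=["java", "spring", "microservices"],
    exclude=["jobs", "job"],
)
print(query)
print(google_url(query))
```

Note the straight quotation marks: curly quotes copied from a word processor may not be treated as phrase delimiters.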

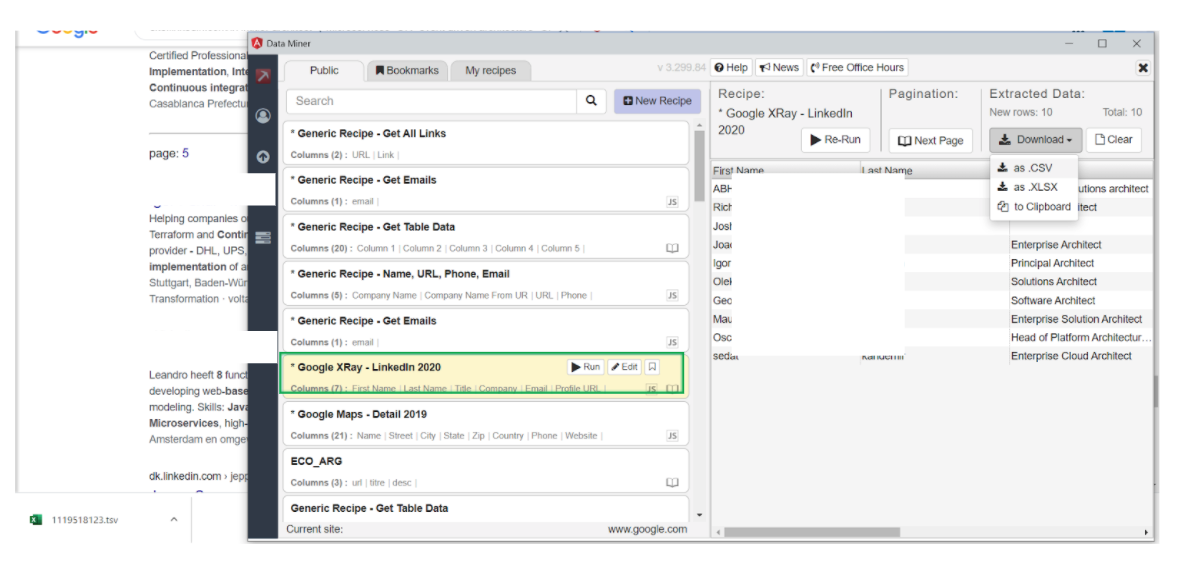

Once I have the search results, I use Dataminer to scrape everything in one go. I can use one of the recipes in Dataminer’s Public Library and download the results as a .csv file.

I then upload the results into my master sheet, enrich them, and start the e-mail campaign via Lemlist.

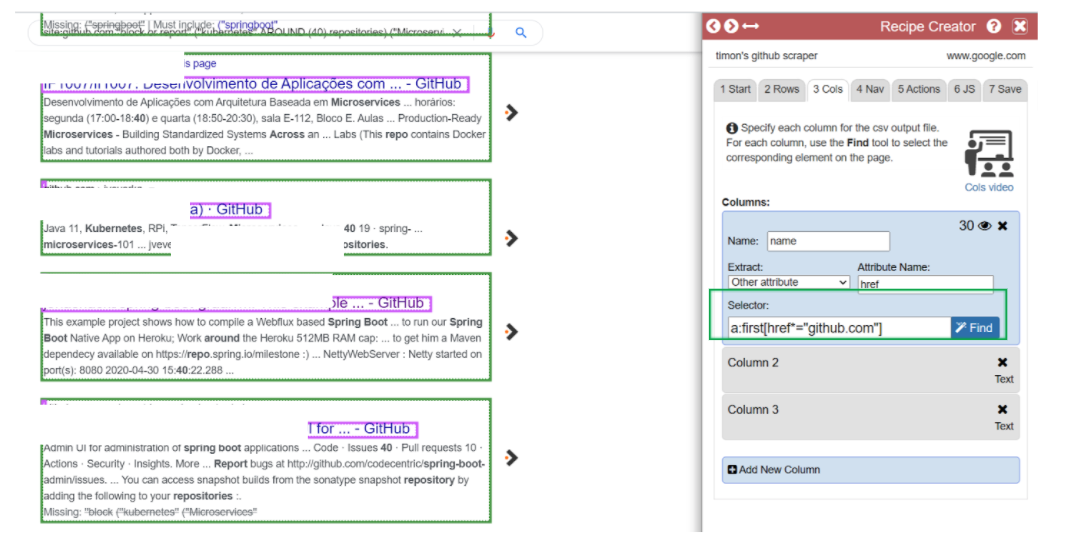

Step 5. GitHub Search and Automate Enrichment

In this example I use the following:

site:github.com "block*report" ("Springboot" AROUND(40) repositories) ("Microservices" AROUND(40) repositories) ("Kubernetes" AROUND(40) repositories) -inurl:followers -inurl:following

Alternatively, you could use this to get even more diverse results:

site:github.com "block*report" java "spring boot" "microservices architecture" -inurl:following -inurl:followers

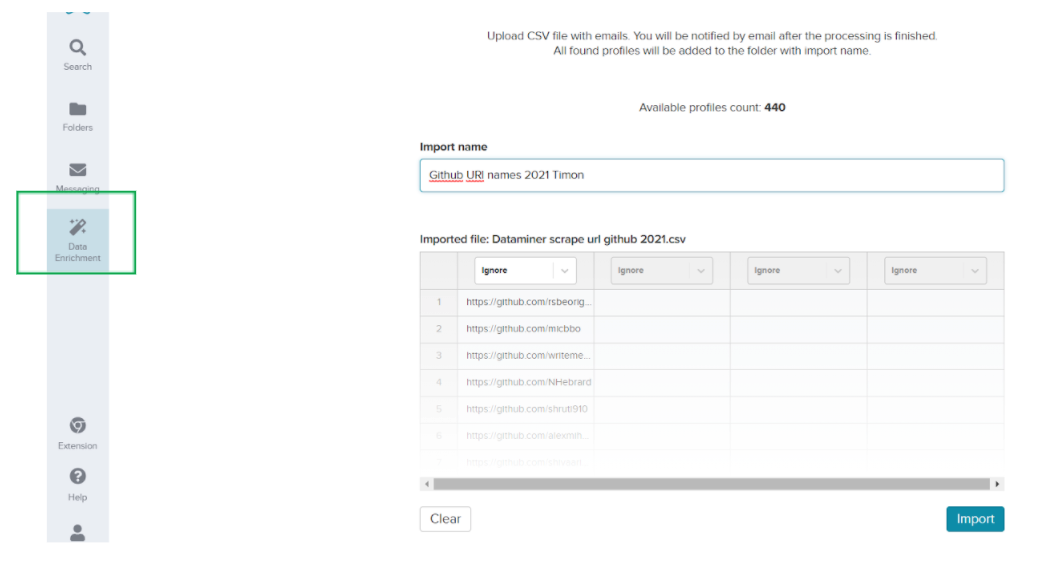

This time I only want to scrape the GitHub usernames using Dataminer, so I need to tweak the recipe by revising the selector (in the column section) to get the desired results.

I use the following selector: a:first[href*="github.com"] (credits to Bret Feig)
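If the scrape returns full profile or repository links rather than bare names, the usernames can be pulled out afterwards. This is a small sketch under my own assumptions: the list of non-user paths is illustrative and incomplete, and the sample URLs are made up.

```python
from urllib.parse import urlparse

# Illustrative, incomplete set of first path segments that are not user accounts.
NON_USER_PATHS = {"topics", "search", "orgs", "features", "about", "login"}


def github_username(url):
    """Return the first path segment of a github.com URL, or None if it is not a user link."""
    parsed = urlparse(url)
    if parsed.netloc.lower() not in {"github.com", "www.github.com"}:
        return None
    segments = [s for s in parsed.path.split("/") if s]
    if not segments or segments[0].lower() in NON_USER_PATHS:
        return None
    return segments[0]


def unique_usernames(urls):
    """Collect usernames in first-seen order, ignoring case when comparing."""
    seen, result = set(), []
    for url in urls:
        name = github_username(url)
        if name and name.lower() not in seen:
            seen.add(name.lower())
            result.append(name)
    return result


scraped = [
    "https://github.com/octocat",
    "https://github.com/octocat/hello-world",
    "https://github.com/topics/java",
]
print(unique_usernames(scraped))
```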

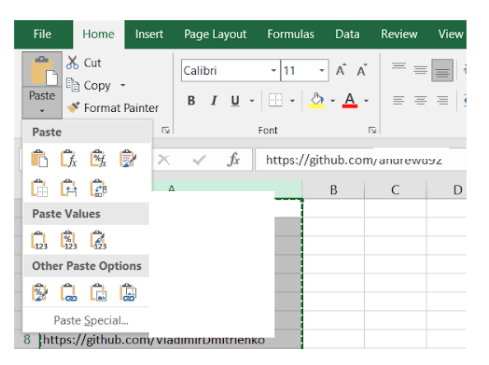

After downloading the results, I make sure that the data is cleaned up and pasted as values into a neat .csv file. Then I upload my results to Amazing Hire to automate the cross-referencing and enrichment.

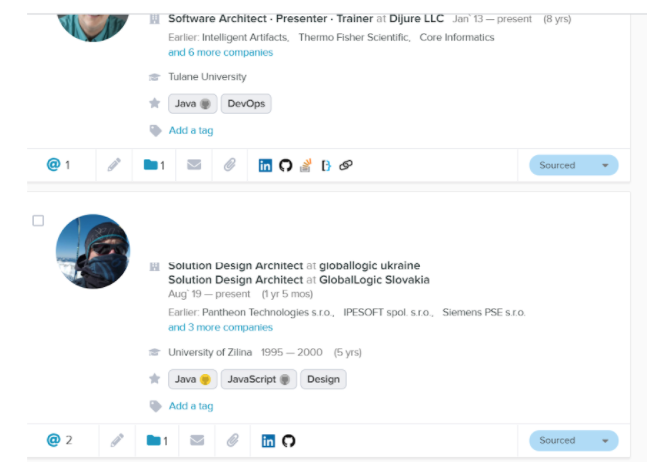

Look! Amazing Hire showed me the candidates I was searching for. I can easily look at their social footprints or enrich my data. I then export the file and upload it, with the enriched data, back into my master sheet. By following the same steps as before, I save a lot of time by cutting out many manual tasks.

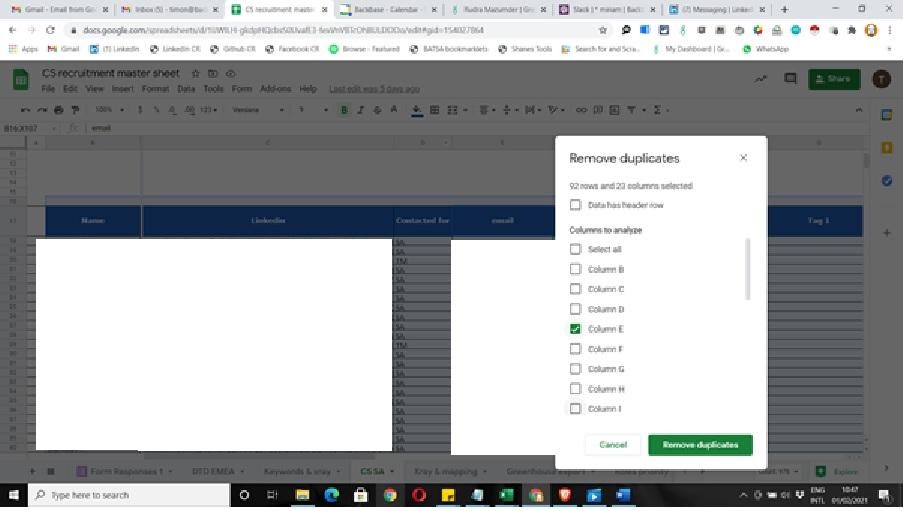

Step 6. Remove Duplicate Data

I make sure to remove duplicate data to avoid sending the same email twice. Since I’m working with Google Sheets, I can remove the duplicates under the Data menu after selecting the column.
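If the master list lives in a CSV rather than a sheet, the same de-duplication can be sketched in Python. I am assuming here that each row has an `email` field and that addresses differing only in case or surrounding spaces count as duplicates; rows without an address are kept so they can still be enriched later.

```python
def dedupe_by_email(rows):
    """Keep the first row per email address, comparing case-insensitively."""
    seen = set()
    unique = []
    for row in rows:
        key = (row.get("email") or "").strip().lower()
        if key and key in seen:
            continue  # already have a row for this address
        if key:
            seen.add(key)
        unique.append(row)
    return unique


# Hypothetical master-sheet rows merged from several sources.
merged = [
    {"name": "Ada", "email": "ada@example.com"},
    {"name": "Ada L.", "email": " ADA@example.com "},
    {"name": "Bob", "email": ""},
]
deduped = dedupe_by_email(merged)
print([row["name"] for row in deduped])
```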

Et voilà! Now you can make your own delicious time-saving recipe.

Authors

Timon Krommendam

Timon Robert Krommendam is currently working as a contract Technical Sourcer at Backbase. He’s very passionate about (behavioural) sourcing, OSINT, and automation; big fan of CSEs, web scraping (webscraper.io), and using common sense.
